The combination of brain-computer interfaces and artificial intelligence: applications and challenges

Introduction

With the rapid expansion of technology, the boundary between humans and machines has begun to narrow. Science-fiction visions of "mind control" are gradually becoming reality with the help of machines. At the frontier of these new techniques are brain-computer interfaces (BCIs) and artificial intelligence (AI). Experimental paradigms for BCIs and AI have usually been developed and applied independently of each other. Increasingly, however, scientists combine BCIs and AI, which makes it possible to use the brain's electrical signals efficiently to maneuver external devices (1).

For severely disabled people, the development of BCIs could be the most important technological breakthrough in decades (2). BCIs, which are technologies designed to communicate with the central nervous system and neural sensory organs, can provide a muscle-independent communication channel for people with neurodegenerative diseases, such as amyotrophic lateral sclerosis, or acquired brain injuries (3). The history of BCIs is intimately related to the development of new electrophysiological techniques for recording extracellular electrical activity, which arises from differences in electric potential carried by ions across the membrane of each neuron (4). Methods for detecting brain signals can be classified as invasive or noninvasive (5). Invasive recording systems include electrocorticography (ECoG), microelectrode arrays (MEAs), and others (6). Noninvasive methods, including electroencephalography (EEG), magnetoencephalography, functional magnetic resonance imaging (fMRI), and functional near-infrared spectroscopy, carry no risk of tissue damage and are relatively easy to implement (7). With the help of these recording techniques, BCIs can be used to 'read' the brain, recording its activity and decoding its meaning, and to 'write' to the brain, manipulating activity in specific regions to affect their function (8). However, the development of BCIs faces limitations. Although multiple extracellular electrodes provide a great deal of information, this large volume of data cannot yet be transferred efficiently (9). Neuroscientists cannot unambiguously discern a person's intentions from the background electrical activity recorded in the brain and map them onto the actions of a robotic arm (10), because the neural correlates of psychological phenomena are inexact and poorly understood (11). 
Fortunately, AI methodologies have recently made great strides, and there is evidence that AI can outperform humans in decoding and encoding neural signals (12). AI is therefore well placed to act as an intermediary that processes signals from the brain before they reach a prosthesis.

AI is a set of general approaches that use a computer to model intelligent behavior with minimal human intervention and that can eventually match or even surpass human performance in task-specific applications (13). When AI operates within a BCI, the algorithms are continuously supplied with internal parameters such as pulse durations and amplitudes, stimulation frequencies, the device's energy consumption, stimulation or recording densities, and the electrical properties of the neural tissue (14). From this information, AI algorithms can identify useful patterns and structure in the data and then produce the desired functional outcomes concurrently (15). Although these studies remain largely in the preclinical domain, continued development may lead to clinically actionable improvements in BCIs.

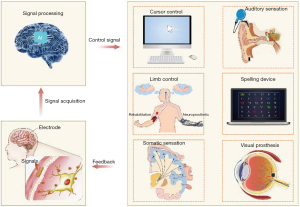

As this technological transformation gets under way, the trend toward combining BCIs and AI has attracted our attention. Here, we review current applications, focusing on the state of BCIs, the role that AI plays, and future directions for AI-based BCIs (Figure 1).

Applications of BCIs based on AI

Applications in cursor control

Early studies focused on enabling paralyzed patients to control personal computer mouse cursors through BCIs and demonstrated high feasibility (16,17). The fundamental components of a cursor control BCI are a sensor that records neural signals, a decoder that interprets movement intentions, and a computer cursor that interacts with the external environment (18).

A pioneering study published in 2000 by Kennedy and colleagues first demonstrated that signals from an invasive BCI device, a special electrode implanted in the outer layers of the human neocortex, could be decoded to drive a cursor on a computer monitor (19). Studies in nonhuman primates have shown that cursor control BCIs can achieve multidimensional neural control with two or more degrees of freedom (20). One-dimensional (1D) cursor control is achievable with EEG by exploiting event-related desynchronization, using decision trees that string sequential selections together to reach a final choice (21,22). Two-dimensional (2D) cursor control can be achieved with techniques such as fMRI or EEG (23). A 2017 study reported a high-performance, invasive BCI for communication that used two algorithms to translate neural signals into point-and-click commands: the ReFIT Kalman filter for continuous 2D cursor control and a hidden Markov model-based state classifier for clicking (24). By providing at least 2D neural control of the cursor together with a parallel selection method such as a click, such a system lets the user not only type self-selected characters but also operate native computer applications, just as a healthy person would with a mouse (25). This BCI raised the communication rate to 32 letters/min, making cursor control considerably more efficient (26).
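The continuous part of such a decoder can be illustrated with a standard velocity Kalman filter. This is a minimal sketch, assuming a linear-Gaussian model in which binned firing rates depend linearly on 2D cursor velocity and using NumPy; it is not the ReFIT variant (which additionally re-estimates the user's intended velocities during closed-loop use and retrains), and the HMM click classifier is omitted. The function names, the 20-channel count, and the synthetic data are hypothetical.

```python
import numpy as np

def fit_kalman(X, Y):
    """Least-squares fit of a linear-Gaussian decoder.

    X : (T, d) cursor velocities during calibration (assumed known)
    Y : (T, n) binned neural features recorded at the same time
    Model: x_t = A x_{t-1} + w_t,  y_t = H x_t + q_t
    """
    A = np.linalg.lstsq(X[:-1], X[1:], rcond=None)[0].T
    W = np.cov((X[1:] - X[:-1] @ A.T).T)   # process-noise covariance
    H = np.linalg.lstsq(X, Y, rcond=None)[0].T
    Q = np.cov((Y - X @ H.T).T)            # observation-noise covariance
    return A, W, H, Q

def kalman_decode(Y, A, W, H, Q, x0, P0):
    """Run the Kalman filter over new neural data and return state estimates."""
    x, P = x0.copy(), P0.copy()
    I = np.eye(len(x0))
    estimates = []
    for y in Y:
        # Predict from the movement model.
        x = A @ x
        P = A @ P @ A.T + W
        # Correct the prediction with the current neural observation.
        S = H @ P @ H.T + Q
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (y - H @ x)
        P = (I - K @ H) @ P
        estimates.append(x.copy())
    return np.array(estimates)

# Synthetic demo: 2D velocity encoded linearly by 20 hypothetical channels.
rng = np.random.default_rng(0)
T, d, n = 500, 2, 20
X = np.zeros((T, d))
for t in range(1, T):
    X[t] = 0.95 * X[t - 1] + rng.normal(size=d)
H_true = rng.normal(size=(n, d))
Y = X @ H_true.T + rng.normal(size=(T, n))

A, W, H, Q = fit_kalman(X[:400], Y[:400])
X_hat = kalman_decode(Y[400:], A, W, H, Q, np.zeros(d), np.eye(d))
r = [np.corrcoef(X[400:, k], X_hat[:, k])[0, 1] for k in range(d)]
```

The filter blends a smooth movement prior (A, W) with each new neural observation (H, Q), which is why Kalman decoders tend to produce steadier cursor trajectories than a purely instantaneous linear readout.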

Many BCI systems for cursor control have been developed on the basis of scalp EEG, ECoG, and synchronous evoked potentials, such as the P300 matrix speller and the rapid serial visual presentation method (27). The BrainGate group conducted the first human trial of a motor BCI starting in June 2004; it recorded signals from a Blackrock 96-channel MEA implanted in the arm area of the primary motor cortex (M1) of a patient with tetraplegia following cervical spinal cord injury (28). The group achieved two-dimensional movement of a cursor on a screen and subsequently used this "neural cursor" to direct the movement of a robotic limb (28).
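The selection step of a P300 matrix speller can be sketched as follows: rows and columns of a character grid flash in turn, a classifier assigns each post-flash EEG epoch a score, and the character at the intersection of the best-scoring row and column is chosen. This is a minimal sketch under those assumptions; the 6 × 6 layout, the function name, and the toy scores are hypothetical, and the classifier that produces the scores is not shown.

```python
from collections import defaultdict

# Hypothetical 6 x 6 speller layout.
MATRIX = ["ABCDEF", "GHIJKL", "MNOPQR", "STUVWX", "YZ1234", "56789_"]

def select_character(flashes):
    """Pick the character at the row and column with the highest mean score.

    flashes: iterable of (kind, index, score), where kind is "row" or "col" and
    score is a classifier's P300-likeness score for the EEG epoch after that flash.
    """
    totals = {"row": defaultdict(float), "col": defaultdict(float)}
    counts = {"row": defaultdict(int), "col": defaultdict(int)}
    for kind, index, score in flashes:
        totals[kind][index] += score
        counts[kind][index] += 1

    def best(kind):
        # Averaging over repeated flashes improves the signal-to-noise ratio.
        return max(totals[kind], key=lambda i: totals[kind][i] / counts[kind][i])

    return MATRIX[best("row")][best("col")]

# Toy scores: the attended character sits at row 2, column 3.
flashes = []
for repetition in range(5):
    for i in range(6):
        flashes.append(("row", i, 1.0 if i == 2 else 0.1))
        flashes.append(("col", i, 1.0 if i == 3 else 0.1))
```

Here `select_character(flashes)` returns `"P"`. Repeating flashes trades speed for accuracy, which is one reason such spellers are slower than intracortical cursor control.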

Applications in neuroprosthetics and limb rehabilitation

The task complexity of BCI studies has rapidly advanced from 2D and 3D control of a cursor on a computer screen (29) to the control of more natural behaviors, such as reaching and grasping (30), self-feeding (31), and bimanual arm movements (32). Watching a person with tetraplegia pick up a cup of coffee with a BCI-controlled robotic arm is striking. The technology works by implanting an array of electrodes on or in the person's motor cortex, the brain region involved in planning and executing movements (33). Brain activity is then recorded while the individual performs a cognitive task, such as imagining moving their hand, and is used to command a robotic limb (33).

In addition, many therapeutic strategies have been developed to help stroke patients regain some function in the affected limb. However, approximately 80% of all stroke survivors with upper limb motor deficits do not benefit from these approaches (34). BCI-based rehabilitation of the lower limb has also been explored recently (35). The underlying principle is that closing the loop between cortical activity reflecting motor intention and the resulting movement, which generates afferent feedback, may restore functional corticospinal and corticomuscular connections (36).

In early neuroprosthetics studies, researchers implanted electrodes into a monkey's brain and recorded the signals while the monkey used a joystick to control a robotic arm. Eventually, the researchers changed the controls so that the robotic arm was driven only by the signals from the electrodes, not by the joystick (37). Invasive and noninvasive BCI systems have since been used to enable neural control of a robotic arm in humans (38,39). Implanted electrodes allow patients to control movements with several degrees of freedom, enabling more complex and functional movements. Noninvasive systems, however, provide more limited control, so complex movements rely largely on the robot's own AI (40). To classify motor-related signals for BCI applications, Nurse and colleagues developed a generalizable approach based on a stochastic machine-learning method (41). Importantly, their classifier does not rely on extensive a priori data to train the BCI. Their algorithm outperformed other methods on the Berlin BMI IV 2008 dataset and achieved high classification accuracy on datasets derived from EEG signals (41).
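To make the classification task concrete, the sketch below uses a different and widely used baseline for two-class motor imagery EEG: common spatial patterns (CSP) followed by log-variance features and a nearest-class-mean rule. This is not the stochastic feature-discovery method of Nurse and colleagues; it is a minimal NumPy sketch, and the function names, the 8-channel layout, and the synthetic trials are hypothetical.

```python
import numpy as np

def csp_filters(trials_a, trials_b, n_pairs=1):
    """Common spatial pattern filters for two classes.

    trials_* : arrays of shape (n_trials, n_channels, n_samples)
    Returns 2 * n_pairs spatial filters (rows), one set per class.
    """
    def mean_cov(trials):
        # Trace-normalised spatial covariance, averaged over trials.
        return np.mean([x @ x.T / np.trace(x @ x.T) for x in trials], axis=0)

    Ca, Cb = mean_cov(trials_a), mean_cov(trials_b)
    # Whitening transform for the composite covariance Ca + Cb.
    evals, evecs = np.linalg.eigh(Ca + Cb)
    P = np.diag(evals ** -0.5) @ evecs.T
    # In the whitened space, an eigenvector with eigenvalue lam carries a
    # fraction lam of variance for class a and 1 - lam for class b.
    lam, B = np.linalg.eigh(P @ Ca @ P.T)
    W = B.T @ P                       # rows sorted by ascending lam
    keep = np.r_[np.arange(n_pairs), np.arange(len(lam) - n_pairs, len(lam))]
    return W[keep]

def log_var_features(W, trials):
    """Normalised log-variance of the spatially filtered signals."""
    Z = np.einsum("fc,tcs->tfs", W, trials)
    var = Z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))

def nearest_mean_predict(Fa, Fb, F):
    """Assign each feature vector to the class with the closer training mean."""
    da = np.linalg.norm(F - Fa.mean(axis=0), axis=1)
    db = np.linalg.norm(F - Fb.mean(axis=0), axis=1)
    return np.where(da < db, 0, 1)

# Synthetic demo: 8-channel "EEG" in which each class has extra power on one channel.
rng = np.random.default_rng(1)

def make_trials(boosted_channel, n_trials=40):
    x = rng.normal(size=(n_trials, 8, 200))
    x[:, boosted_channel, :] *= 3.0
    return x

a, b = make_trials(0), make_trials(1)
W = csp_filters(a[:30], b[:30])
Fa, Fb = log_var_features(W, a[:30]), log_var_features(W, b[:30])
F_test = np.vstack([log_var_features(W, a[30:]), log_var_features(W, b[30:])])
truth = np.r_[np.zeros(10), np.ones(10)]
accuracy = np.mean(nearest_mean_predict(Fa, Fb, F_test) == truth)
```

CSP exploits the fact that imagined movement changes band power over specific sensorimotor areas; in real EEG the trials would first be band-pass filtered (for example to the mu and beta bands), a step omitted here.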

In addition, studies have shown that BCIs can be effective in chronic stroke motor rehabilitation and that stroke patients without residual movement benefit from BCI-induced cortical and subcortical reorganization, including changes in functional and structural connectivity (42). Learning neuroprosthetic control has been shown to trigger large-scale modifications of cortical networks, even in perilesional areas (43). Machine learning algorithms can make gradual decoder modifications (44) and produce robust neuroprosthetic performance that is maintained despite nonstationary neural inputs and changes in context (45). Advances in AI and deep learning may provide new ways to decode neural signals at unprecedented speeds.
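Gradual decoder modification of this kind can be illustrated with a blending rule resembling the "SmoothBatch" update described in the closed-loop decoder adaptation literature: after each batch of data, a decoder is refit by least squares and averaged with the previous decoder, so it changes smoothly rather than abruptly. This is a minimal NumPy sketch assuming a linear velocity decoder and known intended velocities (in practice these are inferred, for example from the direction to the target); the function names, `alpha`, and the synthetic data are hypothetical.

```python
import numpy as np

def fit_batch(rates, intended_vel):
    """Least-squares linear decoder fitted on one batch: intended_vel ≈ rates @ W."""
    return np.linalg.lstsq(rates, intended_vel, rcond=None)[0]

def smooth_update(W_old, rates, intended_vel, alpha=0.8):
    """Blend the current decoder with the batch fit; alpha sets how gradually it changes."""
    return alpha * W_old + (1.0 - alpha) * fit_batch(rates, intended_vel)

# Synthetic session with a hypothetical 10-channel, 2D decoder.
rng = np.random.default_rng(2)
W_true = rng.normal(size=(10, 2))      # unknown mapping the decoder should learn
W = np.zeros((10, 2))                  # start from an uninformative decoder
initial_error = np.linalg.norm(W - W_true)
for batch in range(30):
    rates = rng.normal(size=(200, 10))
    # Assumption: intended velocity is known or inferred, e.g. from the target direction.
    intended = rates @ W_true + 0.1 * rng.normal(size=(200, 2))
    W = smooth_update(W, rates, intended)
final_error = np.linalg.norm(W - W_true)
```

A larger `alpha` makes the decoder more stable but slower to follow nonstationary neural inputs; a smaller one adapts faster but may change the mapping while the user is still learning it.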

Applications in somatosensation

For patients with paralysis, restoring useful movement depends heavily on somatosensory feedback, especially proprioceptive and tactile feedback (46). Mechanoreceptive afferent signals from the skin convey the location of contacts (47) as well as the forces exerted on the skin when we grasp an object (48). Loss of proprioception largely eliminates the ability to plan the dynamics of limb movements (49). Given the importance of somatosensation, the development of bidirectional BCIs is essential. Signals from sensors on the prosthesis could be used to activate neurons with the corresponding receptive fields (36). With the help of AI, the relationship between the pressure that a prosthesis exerts on an object and the appropriate magnitude of intracortical microstimulation (ICMS) pulses can be explored more efficiently (50). Random forests have been shown to be practical and convenient nonlinear classifiers for noninvasive sensorimotor rhythm BCIs (51). In addition, somatosensory evoked potential-based BCIs have been investigated extensively, and a feature extraction method based on the Fukunaga-Koontz transform improved their performance from 70% to 75% (52).

One approach to restoring somatosensation through BCIs is to study natural somatosensory coding and then use stimulation techniques such as ICMS to mimic the neural activity of healthy people (36,53). Tucker Tomlinson and Lee E. Miller observed that a monkey's perception was altered in a predictable manner when ICMS was delivered coincident with force pulses applied to the monkey's hand (54). However, the evoked perception was not accurate enough, because it is currently impossible to activate large numbers of neurons individually and independently (54). More integrated technologies are needed before BCIs can restore somatosensation in humans.

Applications in auditory sensation

The oldest and most widespread type of BCI is the cochlear implant (55). The cochlear implant is a successful example of an afferent interface: according to the U.S. Food and Drug Administration, it had restored hearing in over 200,000 patients worldwide as of December 2010 (

However, although cochlear implantation enables some children to regain hearing and achieve age-appropriate speech development after sensory loss, outcomes vary widely between patients and are difficult to predict (58). A computational framework that combines a complete finite element model with synthetic structures, built from micro-computed tomography (µCT) images, has been designed to supply the missing link between each surgical variable and its effect on nerve excitation. A metric of the usefulness of the stimulation protocol was then calculated and used to rerun the simulations with better parameters (59). A random-forest regression model based on clinical data (e.g., duration of deafness and age at surgery) predicted word recognition scores in postlingually deaf adults with a high accuracy of 95.2% (60). Presurgical neuroanatomical data obtained from MRI have been used in linear multivariate ranking support vector machines to build and validate predictive models of speech-perception improvement after surgery (61). The findings suggest that neural systems unaffected by auditory deprivation best predict postsurgical speech-perception outcomes (61).

Applications in speech synthesizers

Patients with tetraplegia and anarthria can communicate in real time using neural point-and-click control derived from intracortical neural activity (62). With the proliferation of mobile communication equipment such as mobile phones, tablets, and other touch-screen devices, researchers have developed many kinds of virtual keyboards with more efficient text entry, including DASHER, which is driven by gestures and has been tested as a BCI communication device based on one-dimensional EEG control (63). Speech synthesizers go further: they interpret the neural activity that occurs while people silently mouth words and use this information to generate synthetic speech sounds (64).

Locked-in patients with amyotrophic lateral sclerosis can control variations in their slow cortical potentials to operate an electronic spelling device at approximately 2 characters per minute (65). To improve the communication rate further, techniques for decoding speech directly from the cortex should be explored. One study examined the potential to decode words from ECoG signals recorded over Wernicke's area, but the accuracy was modest (66). In a recent study, researchers used a two-stage decoder to reconstruct a speech spectrogram accurately (67). Stage 1 of the decoder is a bidirectional long short-term memory (bLSTM) recurrent neural network that decodes articulatory kinematic features from continuous neural activity recorded from the ventral sensorimotor cortex, superior temporal gyrus, and inferior frontal gyrus. Stage 2 is a separate bLSTM that decodes acoustic features from the articulatory features decoded in stage 1. The audio signal is then synthesized from the decoded acoustic features (67). Translating neural activity directly into speech is more efficient than spelling with a cursor (64), and this strategy may be an important next step toward speech restoration for patients with paralysis.
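The data flow of a two-stage bidirectional decoder can be sketched as below. For brevity, each stage is a simplified stand-in, a bidirectional Elman recurrent network with random, untrained weights, rather than the trained bLSTMs used in the study; the point is only to show how each stage reads context from both earlier and later time bins and how stage 1's output becomes stage 2's input. The class name and all feature dimensions are hypothetical, and NumPy is assumed.

```python
import numpy as np

class BiRNN:
    """Bidirectional Elman RNN with a linear read-out (random, untrained weights)."""

    def __init__(self, n_in, n_hidden, n_out, rng):
        scale = 1.0 / np.sqrt(n_hidden)
        self.n_hidden = n_hidden
        self.W_fwd = rng.normal(scale=scale, size=(n_hidden, n_in + n_hidden))
        self.W_bwd = rng.normal(scale=scale, size=(n_hidden, n_in + n_hidden))
        self.W_out = rng.normal(scale=scale, size=(n_out, 2 * n_hidden))

    def _scan(self, W, X):
        # Simple recurrence: h_t = tanh(W [x_t; h_{t-1}]).
        h = np.zeros(self.n_hidden)
        states = []
        for x in X:
            h = np.tanh(W @ np.concatenate([x, h]))
            states.append(h)
        return np.array(states)

    def __call__(self, X):
        fwd = self._scan(self.W_fwd, X)              # context from earlier bins
        bwd = self._scan(self.W_bwd, X[::-1])[::-1]  # context from later bins
        return np.concatenate([fwd, bwd], axis=1) @ self.W_out.T

rng = np.random.default_rng(0)
# Hypothetical sizes: 64 neural channels -> 12 articulatory -> 20 acoustic features.
stage1 = BiRNN(n_in=64, n_hidden=32, n_out=12, rng=rng)
stage2 = BiRNN(n_in=12, n_hidden=32, n_out=20, rng=rng)

neural = rng.normal(size=(100, 64))      # 100 time bins of neural features
articulatory = stage1(neural)            # stage 1: neural -> articulatory
acoustic = stage2(articulatory)          # stage 2: articulatory -> acoustic
```

Because the backward pass runs from the end of the sequence, the output at each time bin depends on later neural activity as well, which is one motivation for bidirectional models in offline speech reconstruction; a real-time system would need to limit how far ahead it looks.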

However, communication with spelling devices such as these remains much slower than normal speech (64). Moreover, patients who retain even minimal muscle control often still prefer conventional assistive devices, such as eye-gaze trackers or binary switches, because these provide higher communication rates (67). Against this background, the two-stage bLSTM-based decoder represents a notable advance in brain-computer interfacing.
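Communication rates from different BCIs are often compared on a common scale using the information transfer rate (ITR) defined by Wolpaw and colleagues, B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) bits per selection for N equally likely choices selected with accuracy P. The sketch below computes it; the 32-symbol alphabet and error-free selections used in the example are hypothetical simplifications applied to the rates quoted in this section.

```python
import math

def wolpaw_bits_per_selection(n_choices, accuracy):
    """Bits conveyed per selection (Wolpaw definition).

    Assumes every target is equally likely and errors are spread evenly
    over the remaining n_choices - 1 targets.
    """
    N, P = n_choices, accuracy
    if P <= 1.0 / N:
        return 0.0              # at or below chance: treat as no information
    bits = math.log2(N) + P * math.log2(P)
    if P < 1.0:
        bits += (1.0 - P) * math.log2((1.0 - P) / (N - 1))
    return bits

def itr_bits_per_minute(n_choices, accuracy, selections_per_minute):
    return wolpaw_bits_per_selection(n_choices, accuracy) * selections_per_minute

# Hypothetical 32-symbol alphabet, error-free selections.
slow = itr_bits_per_minute(32, 1.0, 2)    # about 2 characters/min
fast = itr_bits_per_minute(32, 1.0, 32)   # about 32 letters/min
```

Under these assumptions the two rates correspond to 10 and 160 bits/min; real error rates lower both figures, and a binary switch used at 90% accuracy conveys only about 0.53 bits per selection.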

Applications in optical prosthetics

The development of visual prostheses is one of the highest priorities in biomedical engineering (68). Complete blindness from retinal degeneration arises from diseases such as Leber's congenital amaurosis or age-related macular degeneration, which cause dystrophy of photoreceptor cells (69). A visual prosthesis works in three steps. First, the prosthesis detects light emitted by sources or reflected from surfaces in the implant recipient's physical environment. Second, the light is transduced into an artificial stimulus. Third, the artificial stimulus is delivered to the retina and evokes a response (70). Rudimentary vision can also be achieved by converting images into binary pulses of electrical signals and delivering them to the visual cortex (70).
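The conversion of an image into binary stimulation pulses can be illustrated by average-pooling a grayscale frame onto a coarse electrode grid and switching each electrode on or off with a brightness threshold. This is a minimal sketch under that assumption; real prostheses also encode pulse amplitude, frequency, and timing, and the function name, grid size, and threshold are hypothetical.

```python
def image_to_electrode_pattern(image, grid_rows, grid_cols, threshold=0.5):
    """Map a grayscale image (rows of values in 0..1) onto an on/off electrode grid.

    Each electrode covers one rectangular block of pixels; it is switched on
    (1) when the mean brightness of its block reaches the threshold.
    Assumes grid_rows <= image height and grid_cols <= image width.
    """
    height, width = len(image), len(image[0])
    pattern = []
    for r in range(grid_rows):
        r0, r1 = r * height // grid_rows, (r + 1) * height // grid_rows
        row = []
        for c in range(grid_cols):
            c0, c1 = c * width // grid_cols, (c + 1) * width // grid_cols
            block = [image[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            row.append(1 if sum(block) / len(block) >= threshold else 0)
        pattern.append(row)
    return pattern

# Toy 8 x 8 frame whose left half is bright, mapped onto a hypothetical 4 x 4 array.
frame = [[1.0] * 4 + [0.0] * 4 for _ in range(8)]
pattern = image_to_electrode_pattern(frame, 4, 4)
```

The resulting pattern switches on the two left-hand columns of electrodes. The coarse grid shows why current devices produce only phosphene-like percepts: most spatial detail is lost before any stimulation occurs.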

For visual prosthesis design, the electrode array is critical. Prosthesis electrode arrays need to adapt to different optimal stimulus locations, stimulus patterns, and patient disease states (71). AI-based BCI methods are therefore likely to be valuable for finding the best electrode array for each patient. A new electrical stimulation tuning system, built on a generalized nonlinear model framework and an autoencoder, has been developed to estimate the electrical stimuli that, when delivered to lateral geniculate nucleus cells, would be equivalent to a given natural visual stimulus (72). Neuralink has developed a new kind of thin, flexible multielectrode polymer probe together with a robotic approach for inserting such flexible probes (73). This technology may be suitable for visual prostheses, offering retinal stimulation methods flexible enough to match the curvature of the retina without placing significant mechanical pressure on it (74).

However, visual prostheses still have important limitations. To date, the sensations they create have taken the form of bright spots, referred to as phosphenes, or simple visual perception patterns (75). Artificial neural networks, which are powerful learning tools with considerable flexibility and scalability, may provide new methods for mimicking the natural visual system.

Discussion and conclusions

The present review highlights current research on AI-based BCIs, a field that has grown rapidly over the last 15 years (76-78). The combination of BCIs and AI offers a powerful way to investigate brain function by providing direct knowledge and control of the neurons that govern behavior, which will help scientists understand the human brain and promote developments in rehabilitation medicine (8). One of the biggest advantages that machine learning may confer on BCIs is real-time or near-real-time modulation of training parameters, with subsequent adjustments in response to active real-time feedback (46). Furthermore, algorithms learn from previous data and guide users toward decisions on the basis of what they have done in the past (79). Patients and healthy subjects alike often show large variability in their ability to self-regulate brain activity for BCI control, and some cannot do so at all, a phenomenon known as BCI illiteracy (79). Adaptive machine learning methods that combine supervised techniques with unsupervised adaptation can help participants with BCI illiteracy gain control of the system (80).
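One simple way to combine supervised training with unsupervised adaptation is to keep the weight vector of a linear discriminant fixed, as learned in a supervised calibration session, and let only its bias track a running mean of unlabeled features during online use, compensating for slow drifts in brain signals. This is a minimal sketch under that assumption, not necessarily the method of the cited studies; the class name, the learning rate `eta`, and the one-dimensional toy drift are hypothetical.

```python
class AdaptiveLinearClassifier:
    """Binary linear classifier whose bias tracks the mean of unlabeled features.

    The weight vector w comes from a supervised calibration session; during
    online use only the global feature mean is updated, without labels.
    """

    def __init__(self, w, initial_mean, eta=0.05):
        self.w = list(w)
        self.mean = list(initial_mean)
        self.eta = eta

    def decide(self, x):
        # Project the feature vector relative to the running mean; sign gives the class.
        score = sum(wi * (xi - mi) for wi, xi, mi in zip(self.w, x, self.mean))
        return 1 if score > 0 else 0

    def adapt(self, x):
        # Exponential moving average of the unlabeled features.
        self.mean = [(1 - self.eta) * mi + self.eta * xi for mi, xi in zip(self.mean, x)]

# Calibration: class 0 near -1, class 1 near +1, so the mean starts at 0.
clf = AdaptiveLinearClassifier(w=[1.0], initial_mean=[0.0])
# Later the features drift upward by 2: class 0 near 1, class 1 near 3.
for i in range(200):
    clf.adapt([1.0] if i % 2 == 0 else [3.0])
```

Before adaptation, a drifted class-0 sample at 1.0 would be misclassified as class 1; after 200 unlabeled updates the running mean sits near 2.0, and samples at 1.0 and 3.0 are again assigned to classes 0 and 1. Updating only the bias is a conservative choice: it needs no labels, but it cannot correct a change in the direction that separates the classes.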

Despite the reported successes and breakthroughs in this field, several problems remain. First, most studies have focused on the recovery of motor ability, and the use of BCIs and AI for cognitive training is still at a very early stage (81). Second, clinical BCI applications are still very limited, and important issues must be solved before BCIs can be considered effective rehabilitation systems in clinical settings; for example, stimulating electrodes with smaller diameters are needed (82). Third, machine learning algorithms often arrive at decision rules that are difficult to predict or interpret, so the process linking a person's thoughts to the technology acting on their behalf remains opaque (83).

As technologies that directly integrate the brain with computers become unprecedentedly complex, they will raise various ethical and social challenges that merit further examination and discussion. For instance, some forms of BCIs are likely to be expensive, raising questions of affordability and of whether people with severe disabilities can access them as assistive technology (84). In addition, BCIs that place a decision-making device in the human brain, with AI software that autonomously adapts its operations, raise questions about human autonomy (85). Brain information stored as digital neural data could also be exploited by others with sufficient computational power to make inferences about our memories, intentions, conscious and unconscious interests, and emotional reactions (86). Moreover, there have been reports of a minority of people undergoing deep brain stimulation for Parkinson's disease who became hypersexual or developed other impulse-control problems (85,86).

In conclusion, AI-based BCI is a rapidly advancing interdisciplinary field that integrates medicine, neuroscience, and engineering. Although most studies evaluating AI applications in BCIs have not yet been rigorously validated for reproducibility and generalizability, the goal of these devices is to improve function and quality of life for people with paralysis, spinal cord injury, amputation, acquired blindness, deafness, memory deficits, and other neurological disorders. The capability to enhance normal motor, sensory, or cognitive function is also emerging and will require careful regulation and control. Further technical development, clinical trials, and regulatory approval will be required before these devices are widely introduced into clinical practice. If these hurdles are overcome, the technology could trigger a revolution in medicine.

Acknowledgments

Funding: This study was funded by the National Key R&D Program of China (2018YFC0116500) and the National Natural Science Foundation of China (81770967, 81822010). The funders had no role in study design, interpretation, and writing of the paper.

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Haotian Lin and Limin Yu) for the series “Medical Artificial Intelligent Research” published in Annals of Translational Medicine. The article was sent for external peer review organized by the Guest Editors and the editorial office.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/atm.2019.11.109). The series “Medical Artificial Intelligent Research” was commissioned by the editorial office without any funding or sponsorship. HL served as the unpaid Guest Editor of the series. The other authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Bell CJ, Shenoy P, Chalodhorn R, et al. Control of a humanoid robot by a noninvasive brain-computer interface in humans. J Neural Eng 2008;5:214-20. [Crossref] [PubMed]

- Lee MB, Kramer DR, Peng T, et al. Brain-Computer Interfaces in Quadriplegic Patients. Neurosurg Clin N Am 2019;30:275-81. [Crossref] [PubMed]

- Kübler A, Kotchoubey B, Hinterberger T, et al. The thought translation device: a neurophysiological approach to communication in total motor paralysis. Exp Brain Res 1999;124:223-32. [Crossref] [PubMed]

- Birbaumer N, Weber C, Neuper C, et al. Physiological regulation of thinking: brain-computer interface (BCI) research. Prog Brain Res 2006;159:369-91. [Crossref] [PubMed]

- Bamdad M, Zarshenas H, Auais MA. Application of BCI systems in neurorehabilitation: a scoping review. Disabil Rehabil Assist Technol 2015;10:355-64. [Crossref] [PubMed]

- Caldwell DJ, Ojemann JG, Rao R. Direct Electrical Stimulation in Electrocorticographic Brain-Computer Interfaces: Enabling Technologies for Input to Cortex. Front Neurosci 2019;13:804. [Crossref] [PubMed]

- Slutzky MW, Flint RD. Physiological properties of brain-machine interface input signals. J Neurophysiol 2017;118:1329-43. [Crossref] [PubMed]

- Slutzky MW. Brain-Machine Interfaces: Powerful Tools for Clinical Treatment and Neuroscientific Investigations. Neuroscientist 2019;25:139-54. [Crossref] [PubMed]

- Baranauskas G. What limits the performance of current invasive brain machine interfaces. Front Syst Neurosci 2014;8:68. [Crossref] [PubMed]

- Athanasiou A, Xygonakis I, Pandria N, et al. Towards Rehabilitation Robotics: Off-the-Shelf BCI Control of Anthropomorphic Robotic Arms. Biomed Res Int 2017;2017:5708937.

- Collinger JL, Gaunt RA, Schwartz AB. Progress towards restoring upper limb movement and sensation through intracortical brain-computer interfaces. Curr Opin Biomed Eng 2018. [Crossref]

- Li JH, Yan YZ. Improvement and Simulation of Artificial Intelligence Algorithm in Special Movements. Applied Mechanics & Materials 2014;513-517:2374-8. [Crossref]

- Patel VL, Shortliffe EH, Stefanelli M, et al. Position paper: The coming of age of artificial intelligence in medicine. Artif Intell Med 2009;46:5-17. [Crossref] [PubMed]

- Silva GA. A New Frontier: The Convergence of Nanotechnology, Brain Machine Interfaces, and Artificial Intelligence. Front Neurosci 2018;12:843. [Crossref] [PubMed]

- Li M, Yan C, Hao D, et al. An adaptive feature extraction method in BCI-based rehabilitation. Journal of Intelligent & Fuzzy Systems Applications in Engineering & Technology 2015;28:525-35. [Crossref]

- Serruya MD, Hatsopoulos NG, Paninski L, et al. Instant neural control of a movement signal. Nature 2002;416:141-2. [Crossref] [PubMed]

- Taylor DM, Tillery SI, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science 2002;296:1829-32. [Crossref] [PubMed]

- Daly JJ, Wolpaw JR. Brain-computer interfaces in neurological rehabilitation. Lancet Neurol 2008;7:1032-43. [Crossref] [PubMed]

- Kennedy PR, Bakay RA, Moore MM, et al. Direct control of a computer from the human central nervous system. IEEE Trans Rehabil Eng 2000;8:198-202. [Crossref] [PubMed]

- Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc Natl Acad Sci U S A 2004;101:17849-54. [Crossref] [PubMed]

- Chatterjee A, Aggarwal V, Ramos A, et al. A brain-computer interface with vibrotactile biofeedback for haptic information. J Neuroeng Rehabil 2007;4:40. [Crossref] [PubMed]

- Kayagil TA, Bai O, Henriquez CS, et al. A binary method for simple and accurate two-dimensional cursor control from EEG with minimal subject training. J Neuroeng Rehabil 2009;6:14. [Crossref] [PubMed]

- Sagara K, Kido K, Ozawa K. Portable single-channel NIRS-based BMI system for motor disabilities' communication tools. Conf Proc IEEE Eng Med Biol Soc 2009;2009:602-5.

- Pandarinath C, Nuyujukian P, Blabe CH, et al. High performance communication by people with paralysis using an intracortical brain-computer interface. eLife 2017;6:e18554. [Crossref] [PubMed]

- Baek HJ, Chang MH, Heo J, et al. Enhancing the Usability of Brain-Computer Interface Systems. Comput Intell Neurosci 2019;2019:5427154.

- Pandarinath C, Nuyujukian P, Blabe CH, et al. High performance communication by people with paralysis using an intracortical brain-computer interface. eLife 2017;6:e18554. [Crossref] [PubMed]

- Wolpaw JR, Birbaumer N, McFarland DJ, et al. Brain-computer interfaces for communication and control. Clin Neurophysiol 2002;113:767-91. [Crossref] [PubMed]

- Bacher D, Jarosiewicz B, Masse NY, et al. Neural Point-and-Click Communication by a Person With Incomplete Locked-In Syndrome. Neurorehabil Neural Repair 2015;29:462-71. [Crossref] [PubMed]

- Serruya MD, Hatsopoulos NG, Paninski L, et al. Brain-machine interface: Instant neural control of a movement signal. Nature 2002;416:141-2. [Crossref] [PubMed]

- Carmena JM, Lebedev MA, Crist RE, et al. Learning to Control a Brain–Machine Interface for Reaching and Grasping by Primates. PLoS Biol 2003;1:E42. [Crossref] [PubMed]

- Velliste M, Perel S, Spalding MC, et al. Cortical control of a prosthetic arm for self-feeding. Nature 2008;453:1098-101. [Crossref] [PubMed]

- Moxon KA, Foffani G. Brain-machine interfaces beyond neuroprosthetics. Neuron 2015;86:55-67. [Crossref] [PubMed]

- Clanton ST. Brain-Computer Interface Control of an Anthropomorphic Robotic Arm. Dissertations & Theses - Gradworks 2011.

- Hendricks HT, van Limbeek J, Geurts AC, et al. Motor recovery after stroke: a systematic review of the literature. Arch Phys Med Rehabil 2002;83:1629-37. [Crossref] [PubMed]

- Wolpaw JR, Birbaumer N. Textbook of Neural Repair and Rehabilitation: Brain-computer interfaces for communication and control. Nat Rev Neurol 2017;12:513.

- Bensmaia SJ, Miller LE. Restoring sensorimotor function through intracortical interfaces: progress and looming challenges. Nat Rev Neurosci 2014;15:313-25. [Crossref] [PubMed]

- Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr Clin Neurophysiol 1988;70:510-23. [Crossref] [PubMed]

- Hochberg LR, Bacher D, Jarosiewicz B, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 2012;485:372-5. [Crossref] [PubMed]

- Collinger JL, Wodlinger B, Downey JE, et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 2013;381:557-64. [Crossref] [PubMed]

- Hübner D, Verhoeven T, Schmid K, et al. Learning from label proportions in brain-computer interfaces: Online unsupervised learning with guarantees. PLoS One 2017;12:e0175856. [Crossref] [PubMed]

- Nurse ES, Karoly PJ, Grayden DB, et al. A Generalizable Brain-Computer Interface (BCI) Using Machine Learning for Feature Discovery. PLoS One 2015;10:e0131328. [Crossref] [PubMed]

- Ramos-Murguialday A, Broetz D, Rea M, et al. Brain-machine interface in chronic stroke rehabilitation: a controlled study. Ann Neurol 2013;74:100-8. [Crossref] [PubMed]

- Ganguly K, Dimitrov DF, Wallis JD, et al. Reversible large-scale modification of cortical networks during neuroprosthetic control. Nat Neurosci 2011;14:662-7. [Crossref] [PubMed]

- Danziger Z, Fishbach A, Mussa-Ivaldi FA. Learning Algorithms for Human–Machine Interfaces. IEEE Trans Biomed Eng 2009;56:1502-11. [Crossref] [PubMed]

- Orsborn AL, Moorman HG, Overduin SA, et al. Closed-loop decoder adaptation shapes neural plasticity for skillful neuroprosthetic control. Neuron 2014;82:1380-93. [Crossref] [PubMed]

- Suminski AJ, Tkach DC, Fagg AH, et al. Incorporating feedback from multiple sensory modalities enhances brain-machine interface control. J Neurosci 2010;30:16777. [Crossref] [PubMed]

- Ochoa J, Torebjörk E. Sensations evoked by intraneural microstimulation of single mechanoreceptor units innervating the human hand. J Physiol 1983;342:633-54. [Crossref] [PubMed]

- Goodwin AW, Wheat HE. Sensory signals in neural populations underlying tactile perception and manipulation. Annu Rev Neurosci 2004;27:53. [Crossref] [PubMed]

- Sainburg RL, Ghilardi MF, Poizner H, et al. Control of limb dynamics in normal subjects and patients without proprioception. J Neurophysiol 1995;73:820-35. [Crossref] [PubMed]

- Müller-Putz GR, Scherer R, Neuper C, et al. Steady-state somatosensory evoked potentials: suitable brain signals for brain-computer interfaces. IEEE Trans Neural Syst Rehabil Eng 2006;14:30-7. [Crossref] [PubMed]

- Steyrl D, Scherer R, Faller J, et al. Random forests in non-invasive sensorimotor rhythm brain-computer interfaces: a practical and convenient non-linear classifier. Biomed Tech (Berl) 2016;61:77-86.

- Nam Y, Cichocki A, Choi S. Common spatial patterns for steady-state somatosensory evoked potentials. 2013.

- Berg JA, Tenore FV, Tabot GA, et al. Behavioral demonstration of a somatosensory neuroprosthesis. IEEE Trans Neural Syst Rehabil Eng 2013;21:500-7.

- Tomlinson T, Miller LE. Toward a Proprioceptive Neural Interface that Mimics Natural Cortical Activity. Adv Exp Med Biol 2016;957:367-88.

- Peters BR, Wyss JM. Worldwide trends in bilateral cochlear implantation. Laryngoscope 2010;120:S17-S44.

- Bilateral Cochlear Implantation: A Health Technology Assessment. Ont Health Technol Assess Ser 2018;18:1-139.

- Konrad P, Shanks T. Implantable brain computer interface: Challenges to neurotechnology translation. Neurobiol Dis 2010;38:369-75.

- Wong DJ, Moran M, O'Leary SJ. Outcomes After Cochlear Implantation in the Very Elderly. Otol Neurotol 2016;37:46.

- Ceresa M, Mangado N, Andrews RJ, et al. Computational Models for Predicting Outcomes of Neuroprosthesis Implantation: the Case of Cochlear Implants. Mol Neurobiol 2015;52:934-41.

- Kim H, Kang WS, Park HJ, et al. Cochlear Implantation in Postlingually Deaf Adults is Time-sensitive Towards Positive Outcome: Prediction using Advanced Machine Learning Techniques. Sci Rep 2018;8:18004.

- Feng G, Ingvalson EM, Grieco-Calub TM, et al. Neural preservation underlies speech improvement from auditory deprivation in young cochlear implant recipients. Proc Natl Acad Sci U S A 2018;115:E1022-E1031.

- Simeral JD, Kim SP, Black MJ, et al. Neural control of cursor trajectory and click by a human with tetraplegia 1000 days after implant of an intracortical microelectrode array. J Neural Eng 2011;8:025027.

- Wills SA, Mackay DJ. DASHER--an efficient writing system for brain-computer interfaces. IEEE Trans Neural Syst Rehabil Eng 2006;14:244.

- Brumberg JS, Nieto-Castanon A, Kennedy PR, et al. Brain-Computer Interfaces for Speech Communication. Speech Commun 2010;52:367-79.

- Birbaumer N, Ghanayim N, Hinterberger T, et al. A spelling device for the paralysed. Nature 1999;398:297-8.

- Kellis S, Miller K, Thomson K, et al. Decoding spoken words using local field potentials recorded from the cortical surface. J Neural Eng 2010;7:056007.

- Anumanchipalli GK, Chartier J, Chang EF. Speech synthesis from neural decoding of spoken sentences. Nature 2019;568:493.

- Herrera-Rincon C, Panetsos F. Substitution of natural sensory input by artificial neurostimulation of an amputated trigeminal nerve does not prevent the degeneration of basal forebrain cholinergic circuits projecting to the somatosensory cortex. Front Cell Neurosci 2014;8:385.

- Shoval A, Adams C, David-Pur M, et al. Carbon nanotube electrodes for effective interfacing with retinal tissue. Front Neuroeng 2009;2:4.

- Weiland JD, Humayun MS. Retinal prosthesis. IEEE Trans Biomed Eng 2014;61:1412-24.

- Cohen ED. Prosthetic interfaces with the visual system: biological issues. J Neural Eng 2007;4:R14-R31.

- Jawwad A, Abolfotuh HH, Abdullah B, et al. Modulating Lateral Geniculate Nucleus Neuronal Firing for Visual Prostheses: A Kalman Filter-based Strategy. IEEE Trans Neural Syst Rehabil Eng 2017;25:1917-27.

- Musk E, Neuralink. An integrated brain-machine interface platform with thousands of channels. J Med Internet Res 2019;21:e16194.

- Hesse L, Schanze T, Wilms M, et al. Implantation of retina stimulation electrodes and recording of electrical stimulation responses in the visual cortex of the cat. Graefes Arch Clin Exp Ophthalmol 2000;238:840-5.

- Panetsos F, Cerio ED, Sanchez-Jimenez A, et al. Thalamic visual neuroprostheses: Comparison of visual percepts generated by natural stimulation of the eye and electrical stimulation of the thalamus. 4th International IEEE/EMBS Conference on Neural Engineering 2009.

- Shyu KK, Lee PL, Lee MH, et al. Development of a Low-Cost FPGA-Based SSVEP BCI Multimedia Control System. IEEE Trans Biomed Circuits Syst 2010;4:125-32.

- Marathe AR, Lawhern VJ, Wu D, et al. Improved Neural Signal Classification in a Rapid Serial Visual Presentation Task Using Active Learning. IEEE Trans Neural Syst Rehabil Eng 2016;24:333-43.

- Ortega P, Colas C, Faisal A. Compact Convolutional Neural Networks for Multi-Class, Personalised, Closed-Loop EEG-BCI. arXiv 2018. arXiv:1807.11752.

- Bauer R, Gharabaghi A. Reinforcement learning for adaptive threshold control of restorative brain-computer interfaces: a Bayesian simulation. Front Neurosci 2015;9:36.

- Vidaurre C, Sannelli C, Müller KR, et al. Machine-Learning-Based Coadaptive Calibration for Brain-Computer Interfaces. Neural Comput 2011;23:791-816.

- Kozma R, Freeman WJ. On Neural Substrates of Cognition: Theory, experiments and application in brain computer interfaces. Proceedings of the 2014 Biomedical Sciences and Engineering Conference 2014.

- Birbaumer N, Ramos Murguialday A, Weber C, et al. Neurofeedback and brain-computer interface clinical applications. Int Rev Neurobiol 2009;86:107-17.

- Lee MB, Kramer DR, Peng T, et al. Clinical neuroprosthetics: Today and tomorrow. J Clin Neurosci 2019;68:13-9.

- Burwell S, Sample M, Racine E. Ethical aspects of brain computer interfaces: a scoping review. BMC Med Ethics 2017;18:60.

- Drew L. The ethics of brain-computer interfaces. Nature 2019;571:S19.

- Bonaci T, Calo R, Chizeck HJ. App Stores for the Brain: Privacy & Security in Brain-Computer Interfaces. IEEE Technol Soc Mag 2014;34:32-9.